Project AICON

Phase 1 – Raster Icon Creation

Using Deep Learning via Text-Generating Neural Network

Idea of the Project

Use a text-generating neural network (textgenrnn, a Python package built on Keras and TensorFlow) to generate icons.

Long-term goals

Use machine learning to generate an icon based on photo(s) or video.

Short-term goals

– Prove that a text-generating neural network can be used to create images

– Learn the limitations of this method

– Compare the results with purely randomly generated icons

Method

First, convert a batch of images into a textual form suitable for the text-generating neural network, essentially turning them into letters, words and sentences. Then, train the neural network on this set of images/sentences. Finally, generate new images/sentences.

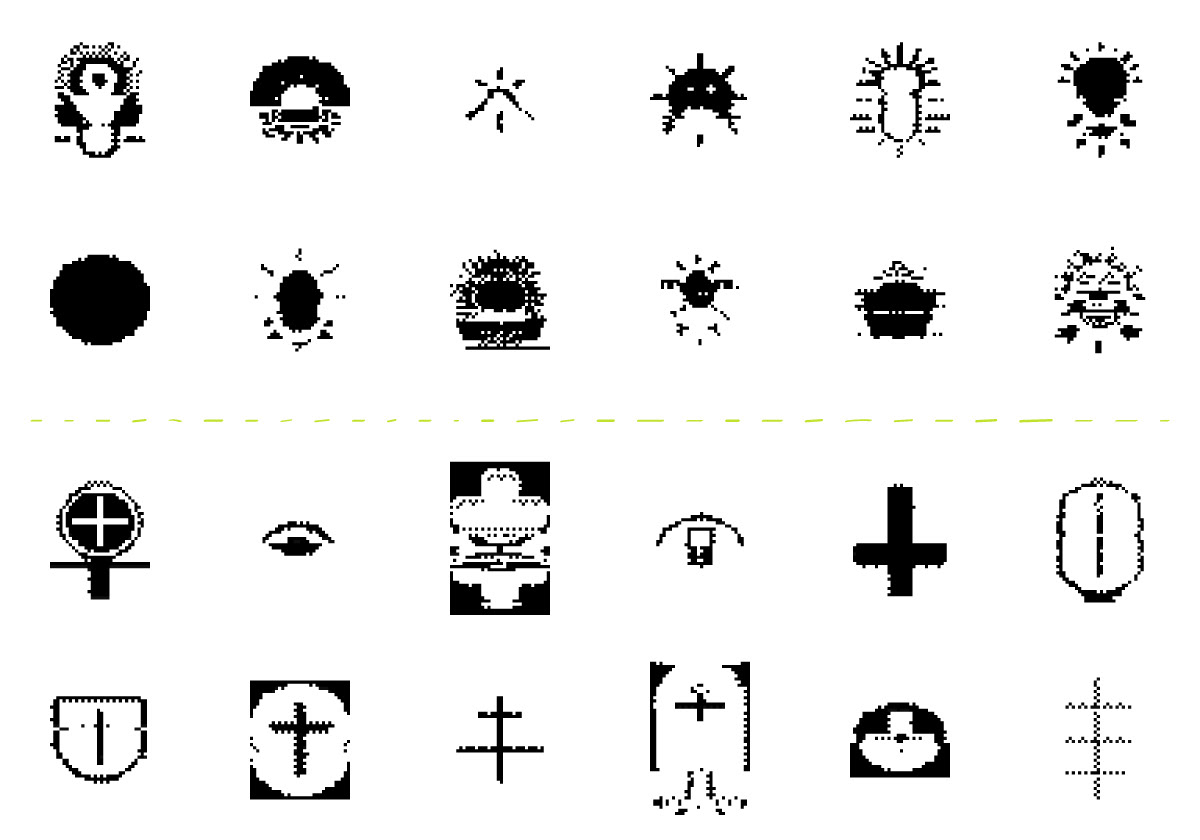

Some of the strange and familiar final results of the raster phase. Sun (top two rows) and plus (bottom) icons generated by two different neural networks.

Image Encoding

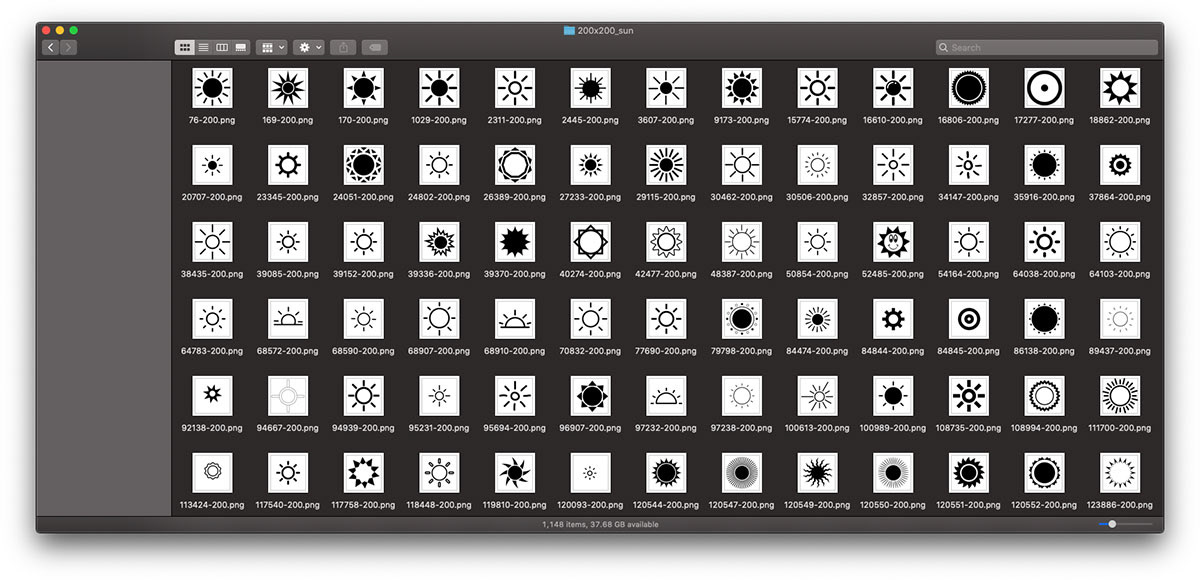

For this first test, I wanted to see whether the “AI” (I will refer to the neural networks I worked with as AI) could actually perceive an image through words. So I downloaded all the icons named “Sun” from The Noun Project and, after removing odd and misleading images, settled on 1148 PNG files.

Some of the 1148 PNG images downloaded from The Noun Project

To simplify the test and keep the resulting sentences short, I batch-processed the PNGs in Photoshop with a custom action. All files were scaled down from 200x200 pixels to 32x32 and converted to GIF format with only two colors, black and white. Having lost detail and all shades of grey, the icons became rough but were still fairly recognizable.

Some of the 32x32 pixel GIF images that the AI was trained on
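The Photoshop action itself is not reproduced here. As a rough, hypothetical stand-in, the same two steps (shrinking to 32x32 and reducing to two colors) can be sketched in plain Python: average each block of source pixels, then apply a fixed threshold with no dithering. The function name `downscale_and_binarize`, the block-averaging method and the threshold of 128 are all assumptions. The input is assumed to be a square grid of grayscale values from 0 (black) to 255 (white), and the output uses 0 for black and 1 for white, matching the convention of the original GIFs described in the Results section.

```python
def downscale_and_binarize(gray, size=32, threshold=128):
    """Shrink a square grayscale grid to size x size and reduce it to two colors.

    Hypothetical stand-in for the Photoshop batch action (an assumption).
    gray: list of rows with values 0 (black) .. 255 (white), e.g. 200x200.
    Returns a size x size grid of 0 (black) and 1 (white).
    """
    src = len(gray)
    if src < size or any(len(row) != src for row in gray):
        raise ValueError("expected a square grid at least size x size")
    out = []
    for oy in range(size):
        # Source rows that map onto this output row (integer block bounds).
        y0, y1 = oy * src // size, (oy + 1) * src // size
        row = []
        for ox in range(size):
            x0, x1 = ox * src // size, (ox + 1) * src // size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean = sum(block) / len(block)
            # Plain threshold, no dithering: dark blocks become black (0).
            row.append(0 if mean < threshold else 1)
        out.append(row)
    return out
```

For a 200x200 source, each output pixel averages a block of about 6x6 source pixels (200 / 32 = 6.25, so the block sizes alternate between 6 and 7).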

The 32x32 GIF files contained only black and white pixels, so I wrote a Python script that converted each image into a binary string 1024 symbols long.

Binary representation of one of the 32x32 pixel GIF images
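The script itself is not included in this write-up. A minimal sketch of the flattening step, assuming each GIF has already been decoded into a 32x32 grid of 0/1 values (0 for black, 1 for white), simply reads the pixels row by row. `grid_to_binary_string` is a hypothetical name.

```python
def grid_to_binary_string(grid, size=32):
    """Flatten a size x size grid of 0/1 pixels into one row-major string.

    Row 0 comes first, read left to right, so a 32x32 icon yields 1024 symbols.
    """
    if len(grid) != size or any(len(row) != size for row in grid):
        raise ValueError("expected a {0}x{0} grid".format(size))
    if any(bit not in (0, 1) for row in grid for bit in row):
        raise ValueError("pixels must be 0 or 1")
    return "".join(str(bit) for row in grid for bit in row)
```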

After trying to train the AI on binary sentences and failing to get any usable results, I decided to simplify (textify) things a bit more and converted each binary string into its hexadecimal (hex) equivalent. Additionally, I separated the encoded rows of pixels with spaces, essentially turning each row into a word.

Hex representation of the same image

In the end, each image was represented by a line of 32 words. Each word contained 8 letters from the hexadecimal “alphabet” (0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F), since each hex letter encodes 4 pixels and each row is 32 pixels wide. In total there were 1148 sentences, ready to be deep-learned by the AI.

Part of the list of 1148 sentences describing 1148 sun icons that the AI was trained on
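The binary-to-hex step works because every hex digit stands for exactly 4 bits, so a 32-pixel row becomes an 8-letter word and the 1024-symbol string becomes 32 words. A minimal sketch, assuming a row-major binary string like the 1024-symbol one described above; `binary_to_hex_sentence` is a hypothetical name, and uppercase digits are used to match the hex alphabet listed above.

```python
def binary_to_hex_sentence(bits, width=32):
    """Encode a row-major 0/1 string as space-separated uppercase hex words.

    Each row of `width` pixels becomes one word of width // 4 hex digits,
    so a 32x32 icon (1024 bits) becomes 32 words of 8 letters each.
    """
    if width % 4 != 0 or len(bits) % width != 0 or set(bits) - {"0", "1"}:
        raise ValueError("bits must be 0/1 and split evenly into rows")
    rows = [bits[i:i + width] for i in range(0, len(bits), width)]
    digits = width // 4
    # Zero-padding keeps leading zeros, so every word has the same length.
    return " ".join(format(int(row, 2), "0{}X".format(digits)) for row in rows)
```

Each resulting sentence is 287 characters long (32 words of 8 letters plus 31 spaces); writing one sentence per line gives a plain-text file with one icon per line.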

The whole process of encoding looked something like this:

Machine Learning

To train the AI, I used Colaboratory, Google's hosted notebook environment, which runs the code (in this case the neural network) on a cloud virtual machine. Getting started with it takes little time or effort. This article explains how to get started with text-generating neural networks: https://minimaxir.com/2018/05/text-neural-networks/

Neural network interface

After the training was done, I generated several sets of text, each containing 1000 new lines representing sun icons. Each set was generated with a different value of the temperature (AKA creativity) parameter, which typically ranges from 0 to 1 and controls how unexpected the results are: higher values give more surprising output, lower values more conservative output. Changing the temperature can produce wildly different outputs.

Results generated based on temperature value of 1 (left) and 0.3 (right)
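Temperature works by reshaping the network's predicted probabilities for the next character before one is sampled. The sketch below shows the common formulation: divide the log-probabilities by the temperature and renormalize (a softmax). It is a generic illustration rather than textgenrnn's own code, and the names `apply_temperature` and `sample_next` are hypothetical. A temperature of 1 leaves the distribution unchanged; lower values such as 0.3 concentrate probability on the most likely characters, which tends to make the output more conservative.

```python
import math
import random

def apply_temperature(probs, temperature):
    """Rescale a probability distribution: softmax(log(p) / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    logits = [math.log(p) / temperature if p > 0 else -math.inf for p in probs]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(symbols, probs, temperature, rng=random):
    """Pick the next symbol after temperature scaling (generic sketch)."""
    weights = apply_temperature(probs, temperature)
    return rng.choices(symbols, weights=weights, k=1)[0]
```

For example, with next-character probabilities of 0.6, 0.3 and 0.1, a temperature of 0.3 raises the top choice to roughly 0.91.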

Instead of converting the generated text into actual GIF images (which would have taken me some time to program in Python), I converted the hex sentences back into binary lines and then created a simple font (using the first online tool I could find: https://fontstruct.com/) to display the ones and zeros. This special font had a black square for 1 and mostly empty space for 0, so in the end the binary code was displayed almost as pixels. The colors were inverted so that the edges of each image would be visible against the white background without the need for borders.

Same binary code shown with the Courier (left) and custom made ZerOne font (right)
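Converting the generated text back to pixels takes only a few lines. A minimal sketch with hypothetical function names: each valid hex word is expanded back into its bits, and the bits are printed with one character for 1 and another for 0, much like the ZerOne font. Skipping words that contain non-hex characters is an assumption, made because generated text is not guaranteed to be well formed.

```python
import string

HEX_DIGITS = set(string.hexdigits)

def hex_sentence_to_rows(sentence):
    """Decode space-separated hex words into binary row strings.

    A word of n hex digits becomes 4 * n bits, leading zeros included.
    Words containing non-hex characters are skipped.
    """
    rows = []
    for word in sentence.split():
        if set(word) <= HEX_DIGITS:
            rows.append(format(int(word, 16), "0{}b".format(4 * len(word))))
    return rows

def render(rows, on="#", off="."):
    """Draw 1s as filled cells and 0s as empty ones, like the ZerOne font."""
    return "\n".join("".join(on if bit == "1" else off for bit in row) for row in rows)
```

Calling `print(render(hex_sentence_to_rows(line)))` on a generated line shows the icon in a terminal with the same inversion as the font: the filled cells are the white pixels of the original GIFs.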

Results

The AI correctly inferred that each word should be 8 symbols long; however, unlike the original text, it generated sentences with varying numbers of words. I am not sure whether this is due to the nature of the software or whether I simply missed some parameters, but overall it is understandable, since sentences in most real languages vary in length.

Part of the results generated with creativity/temperature equals 1

AI-generated “sun” icons with creativity set to 1 (left) and 0.3 (right)
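To see how often generated sentences deviate from the expected 32 words, the lines can be counted directly. A small sketch with hypothetical names, assuming one generated icon per line of text; it reports how many lines have exactly 32 words and which word counts occur.

```python
import string

def describe_line(line, word_len=8):
    """Return (word count, number of words that are exactly word_len hex digits)."""
    words = line.split()
    well_formed = sum(
        1 for w in words if len(w) == word_len and all(c in string.hexdigits for c in w)
    )
    return len(words), well_formed

def summarize(lines, expected_words=32):
    """Count lines with the expected number of words and list the counts seen."""
    stats = [describe_line(line) for line in lines if line.strip()]
    return {
        "lines": len(stats),
        "exact_word_count": sum(1 for n, _ in stats if n == expected_words),
        "word_counts": sorted({n for n, _ in stats}),
    }
```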

Most of the resulting images are not exact copies of the originals fed to the AI, but they still represent the original idea in some way, in this case the sun. I also ran a similar experiment with the plus icon using a smaller set of images, which likewise produced somewhat acceptable “icons” of the plus symbol, along with some interesting new variations based on the rules the AI was able to infer.

AI-generated “plus” icons with creativity set to 1 (left) and 0.3 (right)
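One way to put a number on “not exact copies” (a hypothetical check, not something measured in this experiment) is the Hamming distance: the number of pixels in which a generated icon differs from its closest training icon. A distance of 0 means the AI reproduced an original exactly. The sketch below uses hypothetical names and assumes all words are 8 hex letters, so it only compares sentences with the same number of words.

```python
def sentence_to_int(sentence):
    """Join an icon's hex words into one integer holding all of its pixels."""
    return int("".join(sentence.split()), 16)

def hamming(a, b):
    """Number of differing bits, i.e. pixels that differ between two icons."""
    return bin(a ^ b).count("1")

def nearest_original(generated, originals):
    """Return (distance, sentence) for the closest original, or None.

    Only originals with the same number of words are compared, since
    icons of different sizes cannot be matched pixel for pixel.
    """
    n_words = len(generated.split())
    g = sentence_to_int(generated)
    candidates = (
        (hamming(g, sentence_to_int(o)), o) for o in originals if len(o.split()) == n_words
    )
    return min(candidates, default=None)
```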

In general, I think the AI grasped how to generate new text that represents the original icon. It places words with less pixel information (fewer zeros, which represent black in the original GIFs) at the beginning and end of the sentence and tries to put “dense” words in the middle. The same goes for the plus symbol: the AI clearly “understood” vertical and horizontal lines.
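This observation can be checked numerically by counting the black pixels (zeros) in each word of a sentence. A minimal sketch with a hypothetical function name, assuming every word is valid hex: the pattern described above would show up as a profile that is low at both ends and higher in the middle.

```python
def black_pixels_per_row(sentence):
    """Count black pixels (0 bits) in each hex word of one icon sentence.

    A word of n hex digits covers 4 * n pixels; zeros are black,
    as in the original GIFs.
    """
    counts = []
    for word in sentence.split():
        white = bin(int(word, 16)).count("1")
        counts.append(4 * len(word) - white)
    return counts
```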

I still need to cross-check the AI’s results against a purely random algorithm, but I bet the AI would win.
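A random baseline for that comparison could be as simple as the sketch below (hypothetical, not something run for this article). It generates icon sentences in the same 32-word hex format, with each pixel independently black with probability `p_black`. At the default of 0.5 the result is pure noise; setting `p_black` to the share of black pixels in the training icons would give a somewhat fairer baseline.

```python
import random

def random_icon_sentence(rng, p_black=0.5, size=32):
    """Return one random 'icon' in the same hex-sentence format as the training data.

    Each pixel is independently black (0) with probability p_black, else white (1).
    """
    words = []
    for _ in range(size):
        bits = "".join("0" if rng.random() < p_black else "1" for _ in range(size))
        words.append(format(int(bits, 2), "0{}X".format(size // 4)))
    return " ".join(words)
```

For example, `rng = random.Random(0)` followed by `[random_icon_sentence(rng) for _ in range(1000)]` produces a set the same size as each AI-generated set.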

I consider this test a partial success and am moving on to testing what machine learning can do with vector images.

Stay tuned : )